How to use labeling consensus to get accurate training data

Consensus with detailed reports allows you to monitor and prevent systematic inconsistencies during the labeling process

What is consensus labeling?

Consensus labeling is an annotation approach in which multiple annotators independently label the same set of images, and the final annotation is produced by combining their labels with a consensus algorithm such as the well-known majority voting. The approach is not limited to a specific computer vision task: it can be used for object detection, semantic and instance segmentation, classification and others.
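To make majority voting concrete, here is a minimal sketch for the simplest case, image classification. It is an illustration only, not the algorithm used by any particular tool; the function name `majority_vote`, the file names, and the rule of returning `None` when no label wins a strict majority are all assumptions.

```python
from collections import Counter


def majority_vote(labels):
    """Return the label chosen by a strict majority of annotators, else None."""
    if not labels:
        return None
    label, count = Counter(labels).most_common(1)[0]
    # Require more than half of the votes; otherwise there is no consensus.
    return label if count > len(labels) / 2 else None


# Hypothetical votes from three annotators per image.
votes = {
    "img_001.jpg": ["insulator", "insulator", "broken"],
    "img_002.jpg": ["broken", "insulator", "pollution flashover"],
}
consensus = {name: majority_vote(v) for name, v in votes.items()}
```

Images where `majority_vote` returns `None` are natural candidates for manual review.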

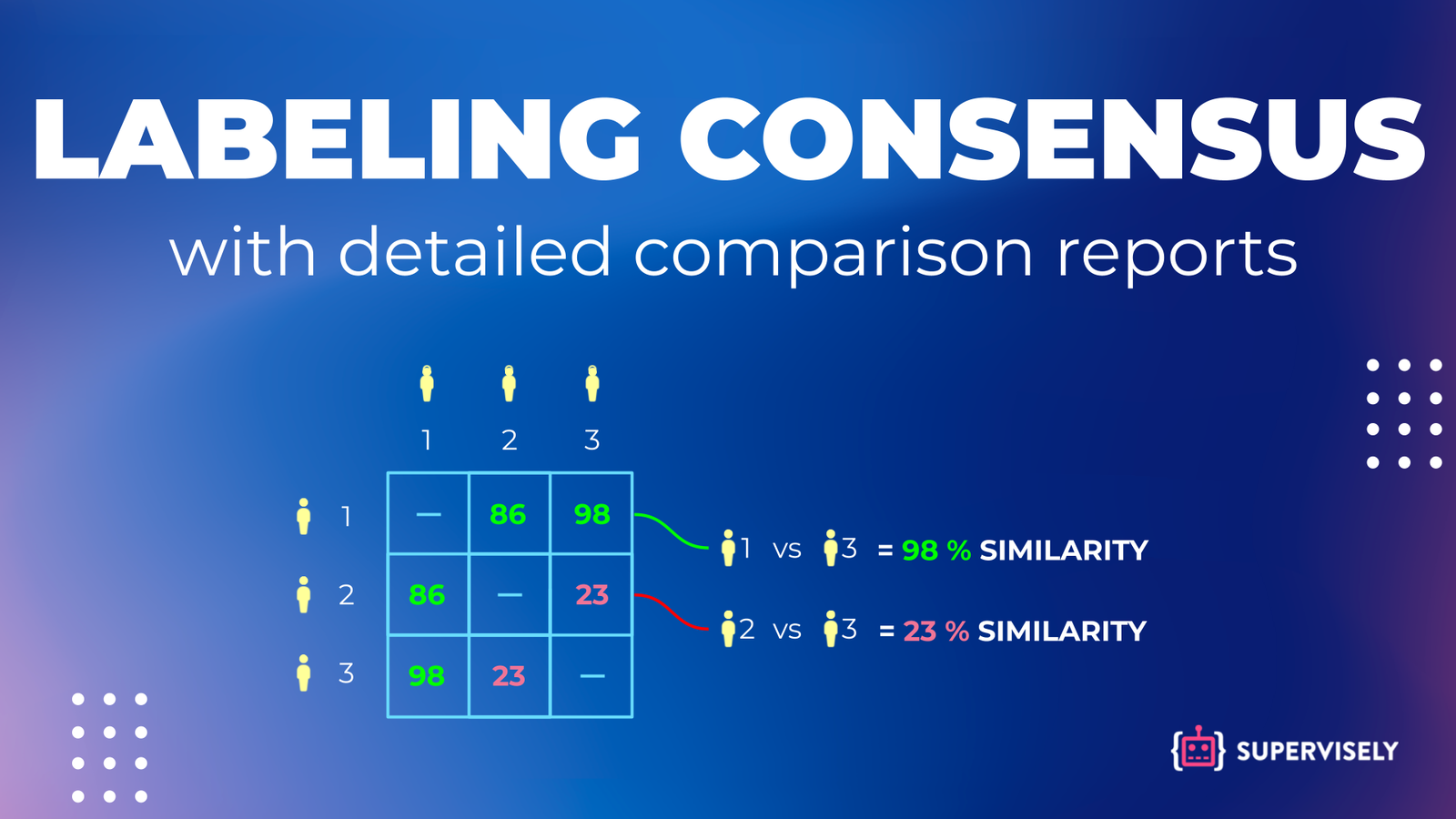

Traditionally, every annotator is compared with every other one, and a consensus score is calculated for each possible pair of labelers. These scores measure the agreement between labels and show how similar the annotations are. The score ranges from 0% to 100%: two completely different annotations of an image give 0%, identical annotations give 100%, and higher values mean the annotations are more similar.
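One common way to get a score with exactly these properties is intersection over union (IoU) of two segmentation masks. The sketch below assumes IoU as the similarity measure and represents masks as nested lists of 0/1; the metric a given consensus tool actually uses may differ, and `mask_consensus` is a hypothetical helper.

```python
def mask_consensus(mask_a, mask_b):
    """Intersection over union of two binary masks, as a percentage."""
    same_shape = len(mask_a) == len(mask_b) and all(
        len(row_a) == len(row_b) for row_a, row_b in zip(mask_a, mask_b)
    )
    if not same_shape:
        raise ValueError("masks must have the same shape")

    intersection = 0
    union = 0
    for row_a, row_b in zip(mask_a, mask_b):
        for a, b in zip(row_a, row_b):
            if a and b:
                intersection += 1
            if a or b:
                union += 1

    if union == 0:
        # Both masks are empty: the annotators agree that nothing is there.
        return 100.0
    return 100.0 * intersection / union


identical = mask_consensus([[1, 1], [0, 0]], [[1, 1], [0, 0]])  # 100.0
disjoint = mask_consensus([[1, 1], [0, 0]], [[0, 0], [1, 1]])   # 0.0
partial = mask_consensus([[1, 1], [0, 0]], [[1, 0], [0, 0]])    # 50.0
```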

Why is consensus labeling important?

Individual labelers often exhibit high variance in annotation accuracy and may understand the annotation requirements and guidelines slightly differently. Labeling consensus tools help you spot such inconsistencies at an early stage, evaluate and manage the labeling team, and find the most accurate workers for your task.

Sometimes it is also hard to define the right annotation requirements for your task in advance. In contrast to other tools, the annotation consensus app in Supervisely provides a detailed report for every consensus score, which helps you dive into the image-level differences and understand the real nature of the annotation inconsistencies. Beyond the benefits described above, these insights let you improve annotation guidelines and requirements with clarifications on edge cases and outliers.

How to use a consensus labeling tool?

In this tutorial, you will learn how to use the labeling consensus application in the Supervisely platform to analyze the quality of annotations made by different labelers in your team and to create accurate, high-quality training data. This collaboration tool is especially helpful when multiple annotators label the same set of images with the same classes and tags.

Key features of consensus labeling tools:

- Calculates the consensus score between all annotators in the form of a confusion matrix
- Works with all types of annotations: bounding boxes, masks, polygons, and even image and object tags
- Provides a detailed report for every consensus score to compare both high-level quality metrics and image-level pixel differences for all images labeled by the pair of annotators
- Allows monitoring consensus in real time at any stage of the labeling process, even if it is not finished

Here is the video tutorial that explains how you can easily use the labeling consensus tool in the Supervisely platform.

Example from my experience: defects inspection

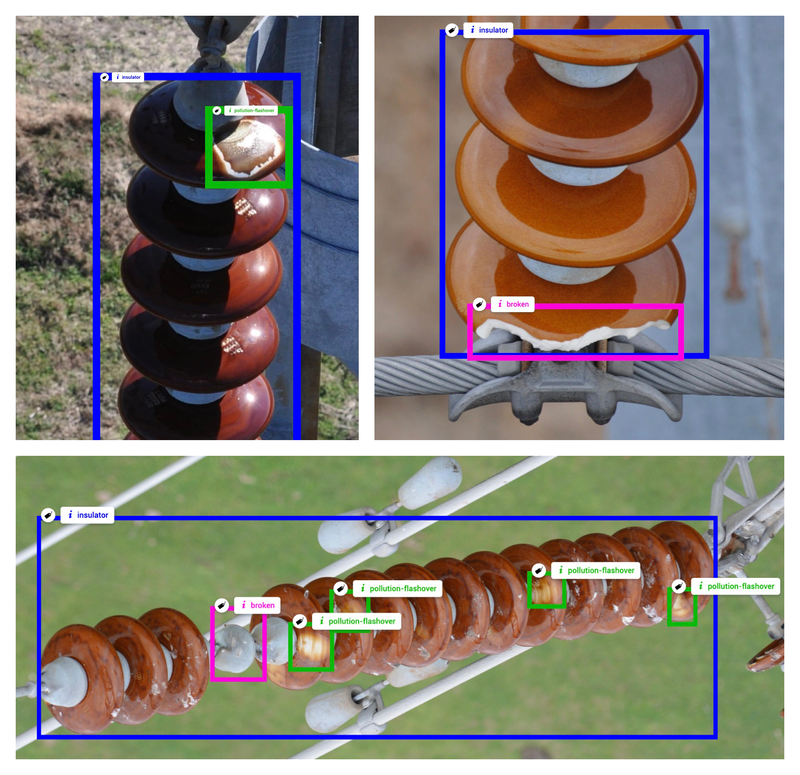

Let's consider the following use case from the industrial inspection domain. Three annotators label drone-captured images with bounding boxes of three classes. The target object is an insulator, which can have two types of defects: pollution flashover and broken parts. The demo data for this blog post is taken from the Insulator-Defect Detection dataset.

Examples of images labeled with three classes of objects: insulator, pollution flashover, broken.

How to set up simultaneous labeling at scale

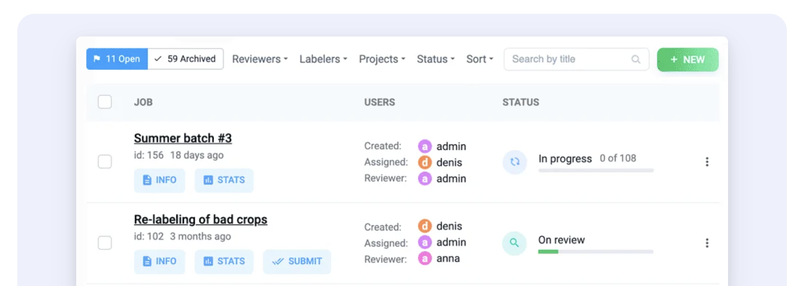

The Supervisely platform offers labeling jobs as part of its collaboration at scale module, which lets managers create annotation jobs and organize smooth communication among even hundreds of members of a labeling team. Details on how to set up and organize the labeling process can be found in the corresponding section of our 📹 full video course.

Example of labeling jobs dashboard with real-time statuses to monitor the whole labeling process efficiently

Labeling consensus app

Labeling Consensus: compare annotations of multiple labelers

During the annotation process, we want to perform quality assurance and measure the consistency and similarity between the work of labelers (consensus scores).

The consensus app can be started from the context menu of a working project. You can also run it directly from the Supervisely Ecosystem, but in that case you need to select a working project in the first step of the app.

Then you will see the confusion matrix with labeling consensus scores for every pair of annotators working on the selected project. The app automatically checks and matches labeled images, objects, classes and tags and performs the calculation of consensus metrics only for the intersection. Thus you can run it at any time in the annotation workflow to monitor the actual state of the consensus quickly.
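The idea of scoring every pair of annotators only on the images they have both labeled can be sketched as follows. This is not the app's implementation: the data layout (a dict of image names to sets of class names per annotator), the annotator names, and the per-image Jaccard similarity are simplifying assumptions chosen to keep the example short.

```python
from itertools import combinations


def jaccard(a, b):
    """Jaccard similarity of two sets; two empty sets count as full agreement."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def consensus_matrix(annotations):
    """annotations: {annotator: {image_name: set_of_class_names}}.

    Returns {(annotator_1, annotator_2): score in percent, or None when the
    pair has no images in common yet}.
    """
    matrix = {}
    for first, second in combinations(sorted(annotations), 2):
        # Only images labeled by both annotators take part in the score.
        shared = annotations[first].keys() & annotations[second].keys()
        if not shared:
            matrix[(first, second)] = None
            continue
        scores = [
            jaccard(annotations[first][img], annotations[second][img])
            for img in shared
        ]
        matrix[(first, second)] = round(100.0 * sum(scores) / len(scores), 1)
    return matrix


# Hypothetical, partially finished labeling job.
annotations = {
    "alice": {"a.jpg": {"insulator"}, "b.jpg": {"insulator", "broken"}},
    "bob": {"a.jpg": {"insulator"}, "b.jpg": {"insulator"}, "c.jpg": {"broken"}},
    "carol": {"c.jpg": {"broken"}},
}
matrix = consensus_matrix(annotations)
```

Because unshared images are skipped rather than counted as disagreement, the matrix stays meaningful while the annotators are still working through their queues.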

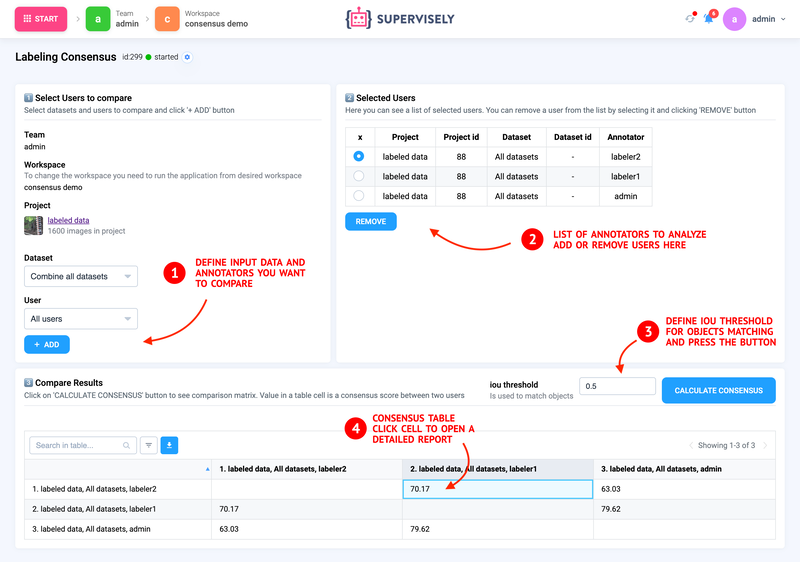

Example of the Labeling consensus app - settings and consensus table

Detailed consensus report

Suppose the annotation consensus score for a pair of labelers is 83%. What does that mean? The metric shows that the annotations made by the two users are 83% similar. That high-level information is helpful, but it does not explain the edge cases: where and why the annotations differ.

For that purpose, our consensus labeling tool has detailed consensus reports for every calculated score. Such reports allow us to get insights and dive deep into a qualitative analysis of data used in metric calculations.

To open the detailed annotation consensus report with the comparison of annotations, you just need to click the consensus score.

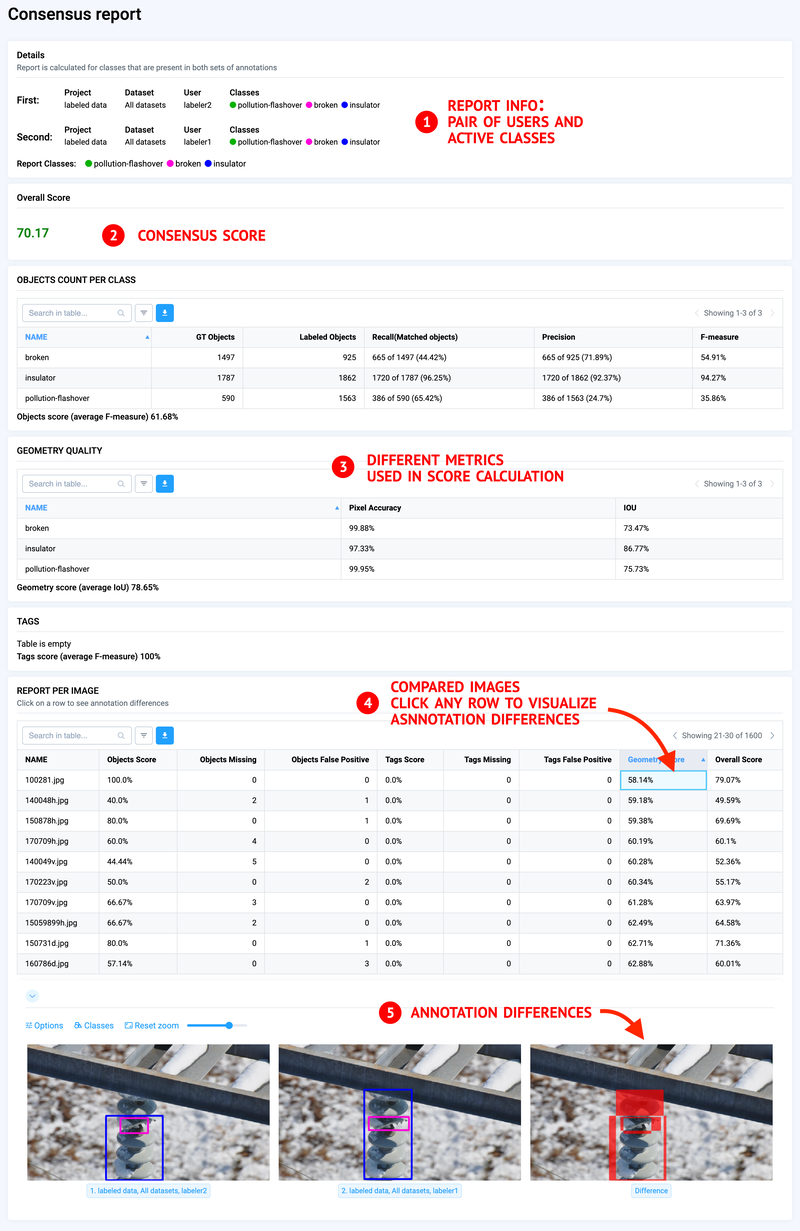

Example of consensus report for the selected pair of annotators

Customization

The labeling consensus tool is a 🌎 open-source app written in 🐍 Python, like most of the apps in the Supervisely Ecosystem made by our team. To make your own custom 🔒 private app, fork the original repo and modify it for your specific use case. For example, you may want to add some actions on top of consensus voting or image-level reports, like removing all unmatched annotations or keeping only the labels backed by the majority of "votes".
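As an illustration of one such custom action, the sketch below removes unmatched bounding boxes for a pair of annotators. It is plain Python and does not use the Supervisely SDK or the app's code; the box format `(x_min, y_min, x_max, y_max)`, the greedy one-to-one matching, the 0.5 IoU threshold, and the names `iou` and `keep_matched` are all assumptions.

```python
def iou(box_a, box_b):
    """IoU of two boxes given as (x_min, y_min, x_max, y_max)."""
    inter_w = max(0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    inter_h = max(0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    inter = inter_w * inter_h
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def keep_matched(boxes_a, boxes_b, threshold=0.5):
    """Keep boxes from boxes_a that have a same-class partner in boxes_b.

    Each input is a list of (class_name, box). Matching is greedy and
    one-to-one: a box in boxes_b can confirm at most one box in boxes_a.
    """
    unused = list(range(len(boxes_b)))
    kept = []
    for cls_a, box_a in boxes_a:
        best_j, best_score = None, threshold
        for j in unused:
            cls_b, box_b = boxes_b[j]
            if cls_a != cls_b:
                continue
            score = iou(box_a, box_b)
            if score >= best_score:
                best_j, best_score = j, score
        if best_j is not None:
            unused.remove(best_j)
            kept.append((cls_a, box_a))
    return kept


# Hypothetical annotations of one image by two labelers.
first = [("insulator", (0, 0, 10, 10)), ("broken", (20, 20, 30, 30))]
second = [("insulator", (1, 1, 10, 10)), ("broken", (50, 50, 60, 60))]
kept = keep_matched(first, second)
```

Here only the insulator box survives: the two "broken" boxes do not overlap, so that label is treated as unmatched and dropped.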

To build tailored applications with a user-friendly graphical user interface (GUI), you do not need any special knowledge of web technologies. Supervisely App Engine was designed for Python developers and data scientists. Just install the Supervisely pip package and follow the guides in our developer portal.

Conclusion

Managing consensus in your annotation team is just a matter of running the app on a working project. The app calculates consensus (similarity) scores and, for every score, produces a detailed report that includes a complete object comparison and the differences in annotations for every image.

Such instruments help you perform annotation quality assurance and quickly find errors and inconsistencies in your labeling team, so the annotation manager can ensure accurate, high-quality training data.

Supervisely for Computer Vision

Supervisely is an online and on-premise platform that helps researchers and companies build computer vision solutions. We cover the entire development pipeline: from data labeling of images, videos and 3D to model training.

The big difference from other products is that Supervisely is built like an OS with countless Supervisely Apps: interactive web tools that run in your browser yet are powered by Python. This makes it possible to integrate all those awesome open-source machine learning tools and neural networks, enhance them with a user interface and let everyone run them with a single click.

You can order a demo or try it yourself for free on our Community Edition — no credit card needed!